Visual attention guided 360-degree video streaming

Chief investigators:

Cheng-Yu Yang, Jui-Chiu Chiang

Abstract:

- In recent years, with the development of multimedia technology, smartphones and virtual reality (VR) headsets have become commonplace, and the video content we watch every day has become increasingly diverse. For example, 360-degree video has become popular, and many YouTubers and Facebook users upload 360-degree videos to document their travels and to broadcast live events.

- Offering an immersive experience requires taking storage and transmission bandwidth into account, because the huge data volume of 360-degree video makes efficient storage and transmission challenging. Over a bandwidth-limited network, 360-degree video playback can suffer from frozen frames or poor quality in the requested viewport, which degrades the user's quality of experience. Efficient, low-latency compression and transmission of 360-degree images and videos is therefore important.

- Based on human visual characteristics, this work proposes techniques for 360-degree image coding and 360-degree video streaming. In the proposed image coding technique, the saliency map is used to modify the distortion term in the RDO (rate-distortion optimization) process; in the proposed video coding technique, it is used to predict the ROI (region of interest). Experimental results show bitrate savings of up to 21.07% for the proposed image coding technique. For 360-degree video streaming, this work allocates more resources to the ROIs during rate control, so that the viewport requested by the user is delivered at high quality. To cope with variations in network bandwidth, the proposed 360-degree video streaming technique is implemented on MPEG-DASH. Both subjective and objective experiments indicate that the proposed technique outperforms the anchor scheme.

- Keywords: 360-degree Video Streaming, Saliency Map, Virtual Reality.

Motivation

- The huge data volume of 360-degree images and videos (resolutions typically range from 4K to 18K) calls for efficient coding of this kind of content.

- Since the observer watches only part of the scene at any time, compressing the whole scene at uniform quality is likely inefficient.

- During video playback, only the part of the content inside the viewport is displayed, so transmitting the full 360-degree video content may waste bandwidth.

- In this work, a visual attention guided coding technique is proposed.

Part I. 360-degree image coding

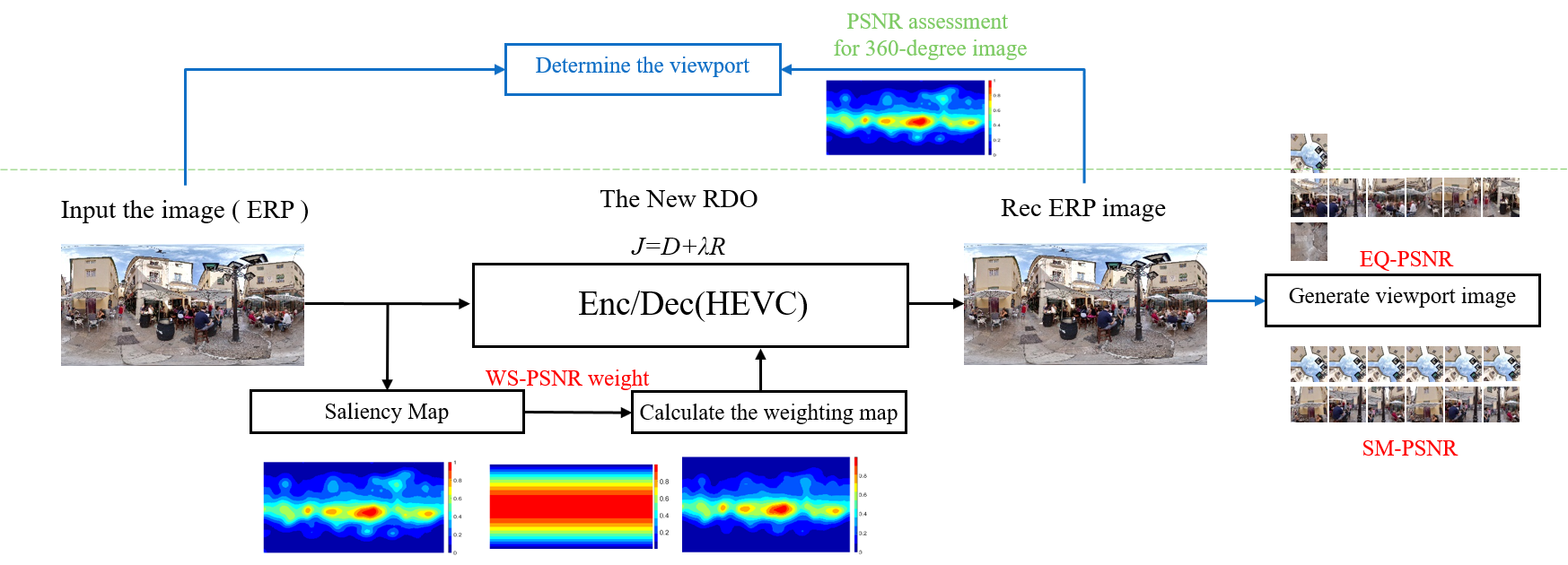

- The 360-degree image is encoded with HEVC, and the decoder side renders the viewport image based on the saliency map.

- The 360-degree image is compressed with HEVC, with the RDO modified according to the saliency map and the WS-PSNR weights. EQ-PSNR and SM-PSNR are then used for quality evaluation.

Fig1. Architecture of 360-degree image compression technique
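The poster states that the RDO distortion is modified by the saliency map and the WS-PSNR weights but does not give the exact formula. The Python sketch below shows one possible form, under stated assumptions: the WS-PSNR weight of ERP row j, cos((j + 0.5 - H/2)·π/H), is scaled by a normalised saliency term, and the resulting weighted squared error replaces plain SSE in the RD cost J = D + λR. The mixing rule `alpha + (1 - alpha) * saliency` and the parameter `alpha` are hypothetical and are not the authors' formula.

```python
import numpy as np


def ws_weights(height: int, width: int) -> np.ndarray:
    """WS-PSNR weights for an ERP picture: cosine of each row's latitude."""
    rows = np.arange(height)
    w_row = np.cos((rows + 0.5 - height / 2) * np.pi / height)
    return np.tile(w_row[:, None], (1, width))


def combined_weights(saliency: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Hypothetical mix: WS-PSNR weight scaled by (alpha + (1 - alpha) * saliency)."""
    s = saliency / max(float(saliency.max()), 1e-12)  # normalise saliency to [0, 1]
    return ws_weights(*saliency.shape) * (alpha + (1.0 - alpha) * s)


def weighted_sse(orig: np.ndarray, recon: np.ndarray, weights: np.ndarray) -> float:
    """Weighted sum of squared errors, used as the distortion D in RDO."""
    diff = orig.astype(np.float64) - recon.astype(np.float64)
    return float(np.sum(weights * diff ** 2))


def rd_cost(orig, recon, weights, bits: float, lam: float) -> float:
    """RD cost J = D_w + lambda * R for one candidate coding mode."""
    return weighted_sse(orig, recon, weights) + lam * bits


def ws_psnr(orig: np.ndarray, recon: np.ndarray, max_val: float = 255.0) -> float:
    """WS-PSNR of a full ERP picture (weighted MSE normalised by the weight sum)."""
    w = ws_weights(*orig.shape)
    wmse = weighted_sse(orig, recon, w) / w.sum()
    return float("inf") if wmse == 0 else float(10 * np.log10(max_val ** 2 / wmse))
```

In an encoder, the weights for the current block would be sliced from the full-picture map (for example `weights[y:y + h, x:x + w]`), and the candidate mode with the lowest `rd_cost` would be kept. Blocks near the poles and in non-salient areas then contribute less distortion, so the encoder spends fewer bits there.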

Part II. Video streaming

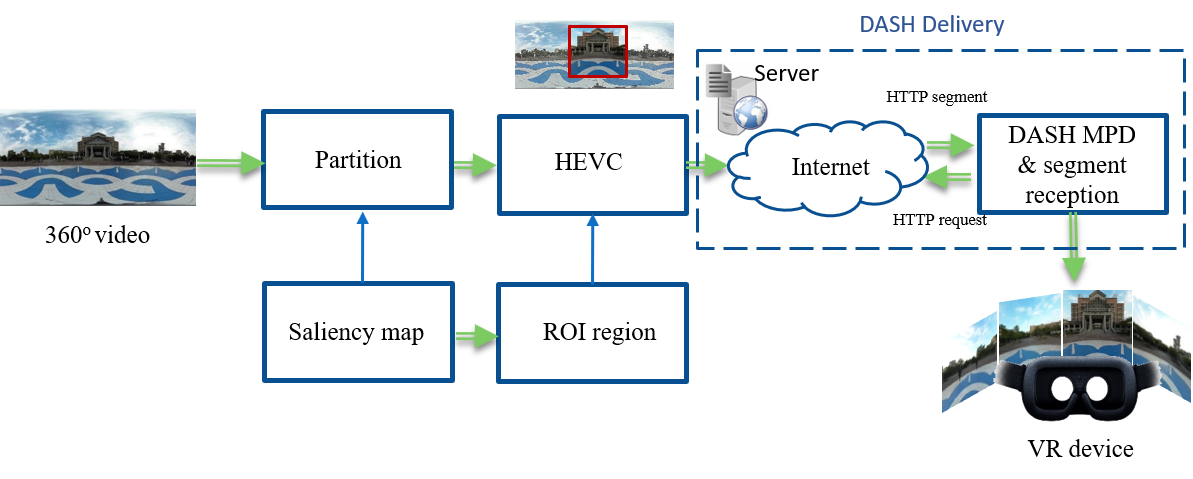

- The 360-degree video is encoded with HEVC and transmitted using MPEG-DASH.

- The server transmits the 360-degree video content continuously according to user behavior.

- Under limited bandwidth, the viewport the user wants to watch is delivered at higher quality.

Fig2. Architecture of the 360-degree video compression and streaming system
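MPEG-DASH standardises the manifest and segment formats but leaves the adaptation logic to the client. The poster does not describe the adaptation rule used, so the sketch below assumes a common throughput-based heuristic: estimate throughput as the harmonic mean of recent segment downloads, then request the highest-bitrate representation that fits within a safety margin of that estimate. The function names, the window of 5 samples and the 0.8 margin are hypothetical.

```python
from statistics import harmonic_mean


def estimate_throughput(samples_kbps: list[float], window: int = 5) -> float:
    """Harmonic mean of the most recent throughput samples (damps short spikes)."""
    return harmonic_mean(samples_kbps[-window:])


def select_representation(bitrates_kbps: list[float], throughput_kbps: float,
                          safety: float = 0.8) -> float:
    """Pick the highest representation bitrate that fits the safety-scaled budget."""
    budget = safety * throughput_kbps
    fitting = [b for b in sorted(bitrates_kbps) if b <= budget]
    return fitting[-1] if fitting else min(bitrates_kbps)  # fall back to the lowest
```

For example, with representations at 1000, 2500, 5000 and 8000 kbps and an estimated throughput of 4000 kbps, the budget is 3200 kbps and the 2500 kbps representation is requested. In a system like the one in Fig2, each representation would be an HEVC rate-controlled encoding in which the ROI is favoured.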

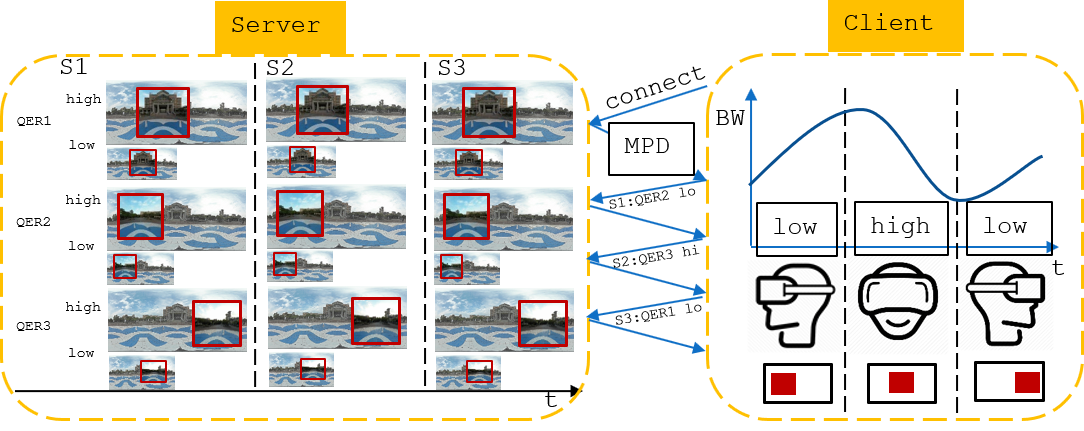

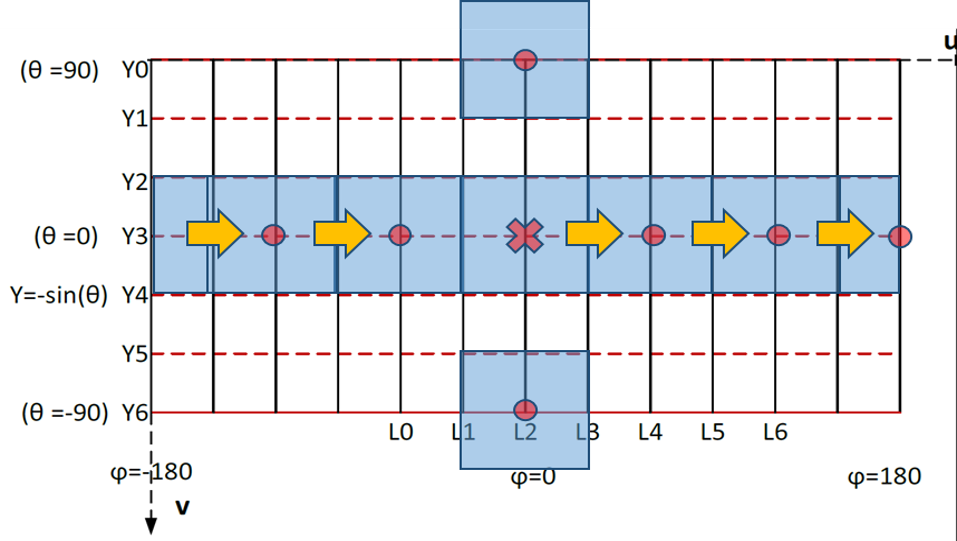

ERP-based video streaming technique

- The viewport location that the user is likely to watch is selected according to the video saliency map, and ROI-based video coding is then performed.

Fig3. Architecture of the 360-degree video compression and streaming system
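The poster does not specify how the viewport location is chosen from the video saliency map. A minimal sketch, assuming the viewport is approximated as an axis-aligned vh x vw window on the ERP frame (ignoring projection distortion), picks the window with the largest total saliency, wrapping horizontally because ERP longitude is periodic, and marks the CTUs that window touches as the ROI. The helper names and the 64 x 64 CTU size (the HEVC maximum) are assumptions for illustration.

```python
import numpy as np


def box_sums(sal: np.ndarray, vh: int, vw: int) -> np.ndarray:
    """Total saliency of every vh x vw window, wrapping horizontally (ERP longitude).

    Entry [r, c] sums rows r..r+vh-1 and columns c..c+vw-1 (mod W).
    """
    height = sal.shape[0]
    padded = np.concatenate([sal, sal[:, :vw - 1]], axis=1)  # wrap-around columns
    ii = np.zeros((height + 1, padded.shape[1] + 1))
    ii[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)  # integral image
    return ii[vh:, vw:] - ii[:-vh, vw:] - ii[vh:, :-vw] + ii[:-vh, :-vw]


def predict_viewport(sal: np.ndarray, vh: int, vw: int) -> tuple[int, int]:
    """Top-left corner (row, col) of the most salient viewport-sized window."""
    sums = box_sums(sal, vh, vw)
    r, c = np.unravel_index(np.argmax(sums), sums.shape)
    return int(r), int(c)


def roi_ctu_mask(shape: tuple[int, int], top: int, left: int,
                 vh: int, vw: int, ctu: int = 64) -> np.ndarray:
    """Boolean CTU map that is True for every CTU the viewport window touches."""
    height, width = shape
    mask = np.zeros((-(-height // ctu), -(-width // ctu)), dtype=bool)  # ceil division
    ctu_rows = np.unique(np.arange(top, top + vh) // ctu)
    ctu_cols = np.unique((np.arange(left, left + vw) % width) // ctu)
    mask[np.ix_(ctu_rows, ctu_cols)] = True
    return mask
```

The integral image makes every window sum an O(1) lookup, so scanning all candidate positions of a frame-sized saliency map stays cheap; the returned mask can drive ROI-based bit allocation during rate control.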

Experimental results

Part I. 360-degree image compression experimental results

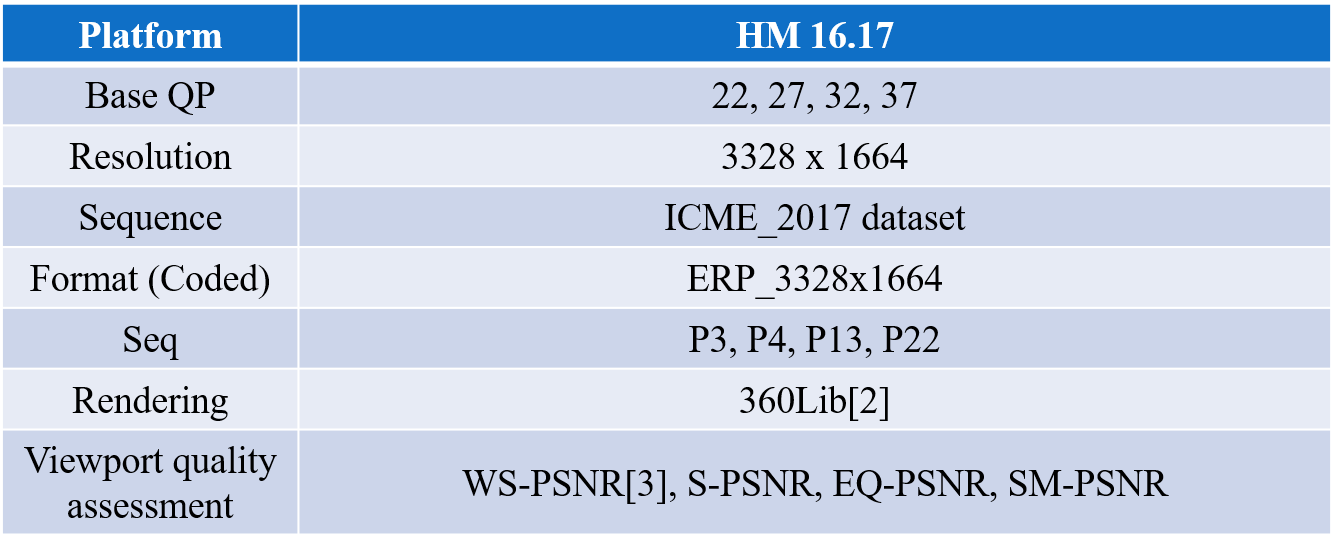

- To verify the performance of the proposed 360-degree image coding technique, we selected four test images from the dataset for the experiments.

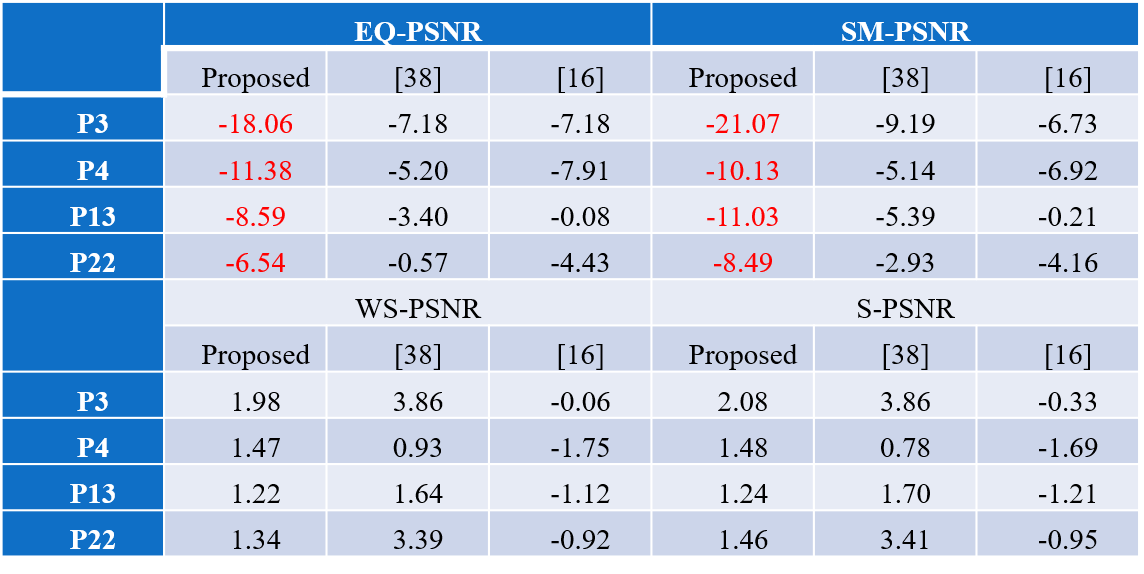

Table1. Architecture of 360-degree image compression technique

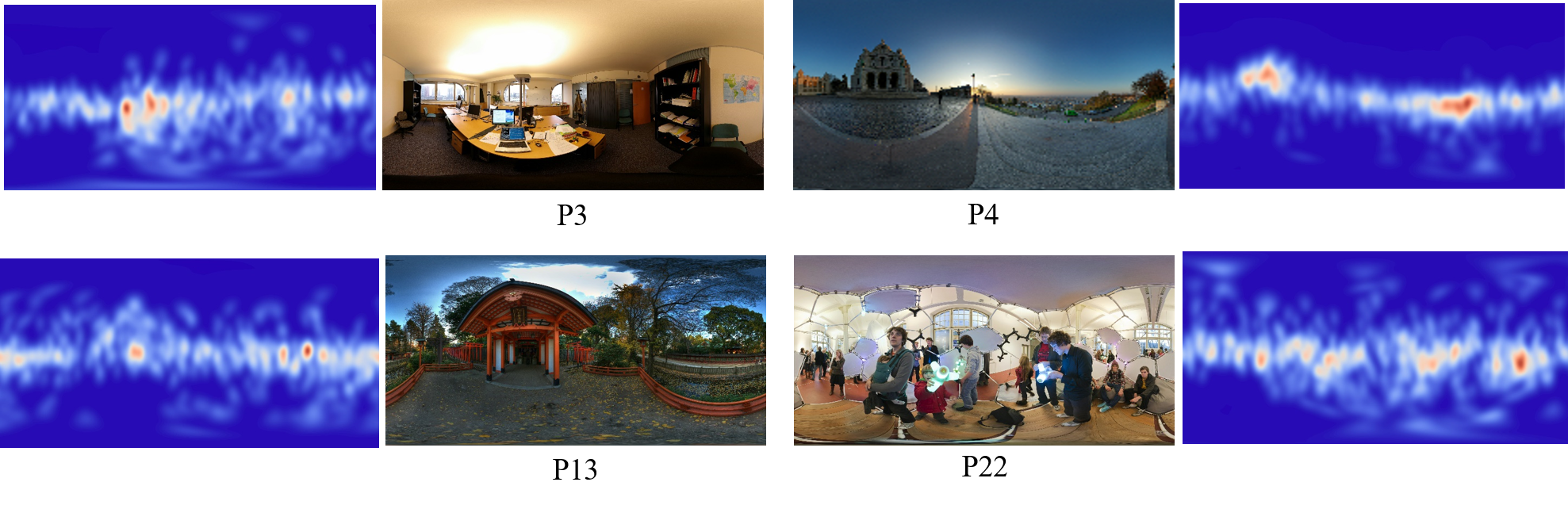

- Four 360-degree test images and their corresponding saliency maps from the dataset [4].

Fig4. Four sets of test images [4]

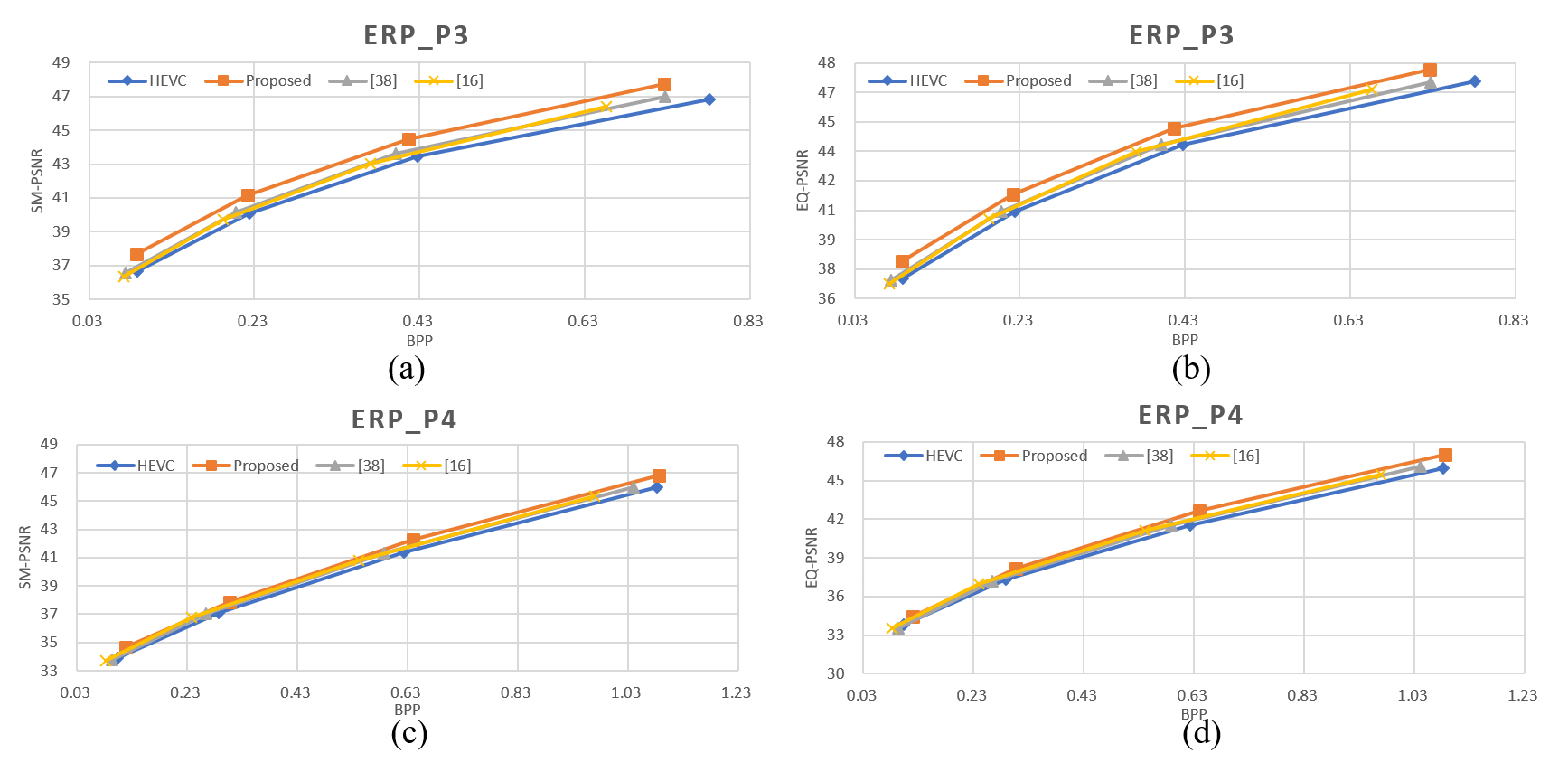

- To account for the viewport the user actually watches, EQ-PSNR and SM-PSNR are used as the comparison metrics.


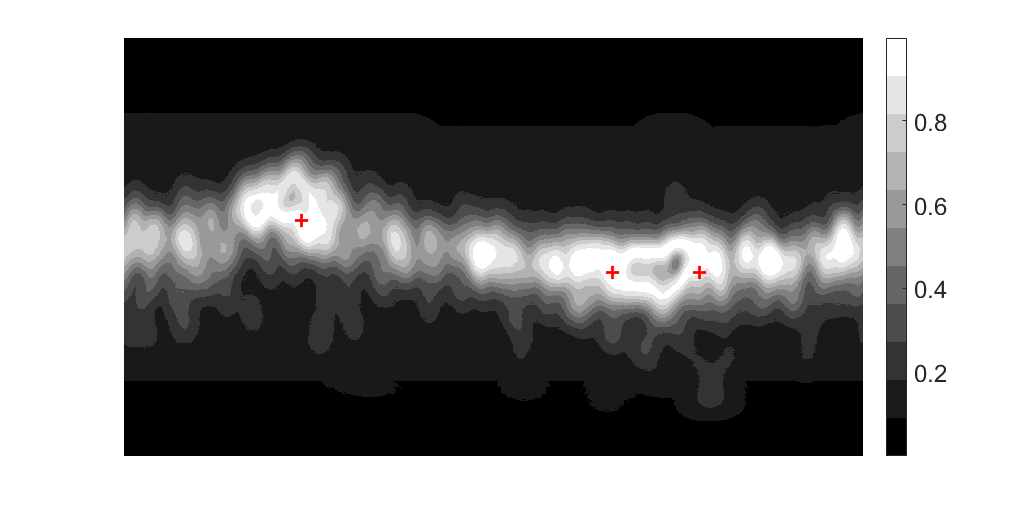

Fig5. 360-degree image quality assessment method

(a) Equator bias: the viewport center is placed on the equator. (b) The three most salient areas are located.
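Fig5 only outlines how EQ-PSNR and SM-PSNR choose their viewports. The sketch below is an interpretation under loudly stated assumptions: EQ-PSNR is taken as the PSNR of an h x w window centred on the equator row at a chosen column `cx`, and SM-PSNR as the mean PSNR of windows centred on the three most salient locations, found by greedy peak picking with window suppression. PSNR is computed on rectangular ERP crops rather than rendered rectilinear viewports, and the window size, `cx` and all function names are hypothetical.

```python
import numpy as np


def psnr(a: np.ndarray, b: np.ndarray, max_val: float = 255.0) -> float:
    mse = np.mean((a.astype(np.float64) - b.astype(np.float64)) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(max_val ** 2 / mse))


def crop_wrap(img, cy, cx, h, w):
    """h x w window centred at (cy, cx): wraps horizontally, clamps vertically."""
    height, width = img.shape[:2]
    top = min(max(cy - h // 2, 0), height - h)
    cols = np.arange(cx - w // 2, cx - w // 2 + w) % width
    return img[top:top + h][:, cols]


def top_k_centres(sal, k, h, w):
    """Greedy peak picking: take the saliency maximum, suppress its window, repeat."""
    s = sal.astype(np.float64).copy()
    centres = []
    for _ in range(k):
        cy, cx = np.unravel_index(np.argmax(s), s.shape)
        centres.append((int(cy), int(cx)))
        top = max(int(cy) - h // 2, 0)
        cols = np.arange(cx - w // 2, cx - w // 2 + w) % s.shape[1]
        s[top:top + h, cols] = -np.inf  # the next pick must come from another area
    return centres


def eq_psnr(orig, recon, cx, h, w):
    """PSNR of a viewport-sized window centred on the equator row at column cx."""
    cy = orig.shape[0] // 2
    return psnr(crop_wrap(orig, cy, cx, h, w), crop_wrap(recon, cy, cx, h, w))


def sm_psnr(orig, recon, sal, h, w, k=3):
    """Mean PSNR over windows centred on the k most salient areas."""
    vals = [psnr(crop_wrap(orig, cy, cx, h, w), crop_wrap(recon, cy, cx, h, w))
            for cy, cx in top_k_centres(sal, k, h, w)]
    return float(np.mean(vals))
```

Errors outside the chosen windows do not affect either metric, which is the point of viewport-oriented evaluation: a scheme that moves bits from unwatched regions into the equator band or the salient areas scores higher.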

- The Bjøntegaard delta bitrate (BD-rate, %) [6] is used to compare the coding performance of the different schemes.

Table2. 360-degree image compression RD performance
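For reference, the standard Bjøntegaard BD-rate computation [6] fits log10(bitrate) as a cubic polynomial of PSNR for each RD curve, integrates both fits over the PSNR range covered by both curves, and converts the average log-rate difference into a percentage; a negative value means the test scheme needs less bitrate for the same quality. The Python sketch below is a generic implementation, not the authors' evaluation script, and assumes at least four RD points per curve (a cubic fit needs four).

```python
import numpy as np


def bd_rate(rate_anchor, psnr_anchor, rate_test, psnr_test) -> float:
    """Bjøntegaard delta bitrate (%) of the test curve relative to the anchor."""
    log_a = np.log10(np.asarray(rate_anchor, dtype=float))
    log_t = np.log10(np.asarray(rate_test, dtype=float))
    # Cubic fit of log-rate as a function of PSNR for each curve
    fit_a = np.polyfit(psnr_anchor, log_a, 3)
    fit_t = np.polyfit(psnr_test, log_t, 3)
    # Integrate only over the PSNR interval covered by both curves
    lo = max(min(psnr_anchor), min(psnr_test))
    hi = min(max(psnr_anchor), max(psnr_test))
    int_a, int_t = np.polyint(fit_a), np.polyint(fit_t)
    area_a = np.polyval(int_a, hi) - np.polyval(int_a, lo)
    area_t = np.polyval(int_t, hi) - np.polyval(int_t, lo)
    avg_log_diff = (area_t - area_a) / (hi - lo)
    return float((10 ** avg_log_diff - 1) * 100)
```

As a sanity check, a test curve that reaches every PSNR point with 90% of the anchor's bitrate yields a BD-rate of -10%.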

- 360-degree image compression results.

Fig6. Results for P3 and P4: (a)(c) SM-PSNR of the salient regions; (b)(d) EQ-PSNR of the equator region

Part II. Experimental results of 360-degree video compression and streaming technology

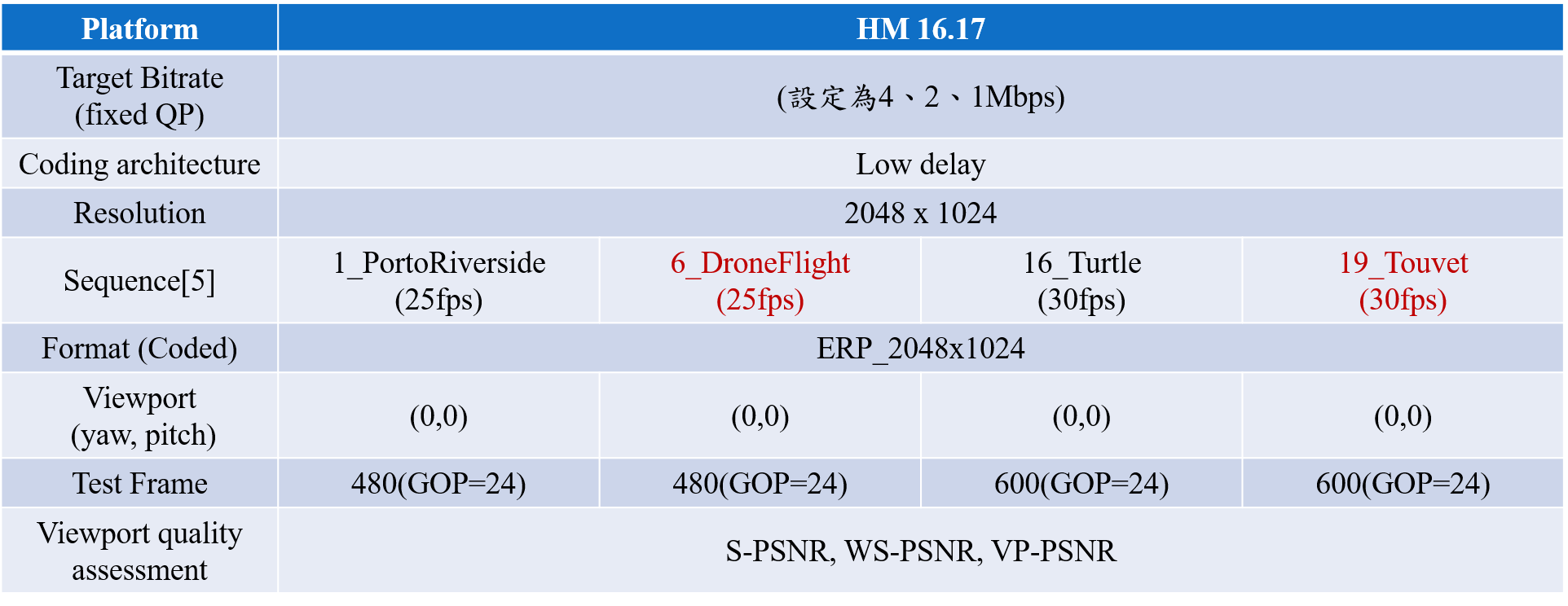

- The proposed method uses HEVC rate control so that each encoded bitstream matches the bitrate required by the bandwidth setting of network streaming.

Table3. Experimental settings for 360-degree video compression
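The HEVC reference software (HM) uses λ-domain rate control, in which λ = α·bpp^β and the QP is derived from λ. The poster does not say how ROI CTUs receive extra resources, so the sketch below assumes a simple rule in which each ROI CTU receives `roi_weight` times the bit share of a background CTU; each CTU's target bits are then mapped to λ and QP using HM's initial parameters α = 3.2003, β = -1.367 and the relation QP = 4.2005·ln λ + 13.7122. The per-CTU α/β updates HM performs after encoding are omitted, and `roi_weight` and the function names are hypothetical.

```python
import math

# Initial R-lambda parameters of HM's lambda-domain rate control
ALPHA, BETA = 3.2003, -1.367


def allocate_ctu_bits(frame_bits: float, roi_mask: list[bool],
                      roi_weight: float = 2.0) -> list[float]:
    """Split a frame's bit budget over CTUs; ROI CTUs get roi_weight times more."""
    weights = [roi_weight if is_roi else 1.0 for is_roi in roi_mask]
    total = sum(weights)
    return [frame_bits * w / total for w in weights]


def qp_from_bits(bits: float, pixels: int,
                 alpha: float = ALPHA, beta: float = BETA) -> int:
    """Map a CTU's target bits to a QP via lambda = alpha * bpp^beta."""
    bpp = bits / pixels
    lam = alpha * bpp ** beta
    qp = round(4.2005 * math.log(lam) + 13.7122)
    return max(0, min(51, qp))  # HEVC QP range for 8-bit video
```

For a 64 x 64 CTU (4096 pixels), splitting 300 bits between one ROI CTU and one background CTU gives 200 and 100 bits, which map to QP 36 and QP 40 respectively: the ROI is coded at finer quantisation while the frame budget set by the streaming bandwidth is unchanged.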

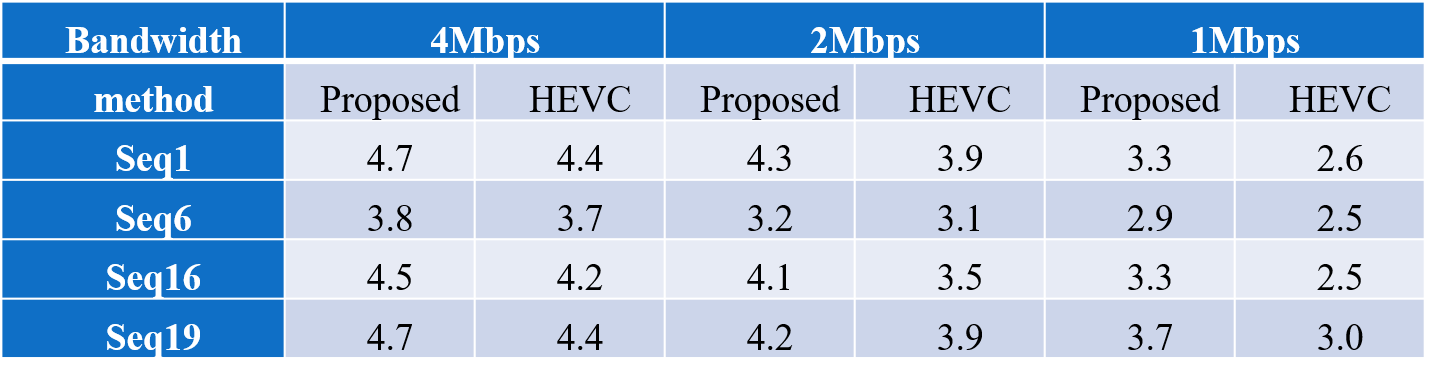

- Test videos consisting of the original video and the video produced by the proposed method are played continuously to 10 subjects.

Table4. Subjective quality test score MOS of the proposed method
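The poster reports MOS values from 10 subjects without describing the statistics. As a generic illustration, not the authors' analysis script, the MOS of one test condition is the mean of the subjects' scores, and a 95% confidence interval can be attached using the Student t critical value for n - 1 = 9 degrees of freedom (about 2.262). Pairing each condition's bitrate with its MOS gives the points of an R-M curve. The function names and the confidence-interval step are assumptions.

```python
import math
from statistics import mean, stdev

T_95_DF9 = 2.262  # two-sided 95% Student t critical value for 9 degrees of freedom


def mos_with_ci(scores: list[float], t_crit: float = T_95_DF9) -> tuple[float, float]:
    """Return (MOS, half-width of the confidence interval) for one condition.

    The default t_crit assumes exactly 10 subjects.
    """
    m = mean(scores)
    half_width = t_crit * stdev(scores) / math.sqrt(len(scores))
    return m, half_width


def rm_curve(results: dict[float, list[float]]) -> list[tuple[float, float]]:
    """(bitrate, MOS) points sorted by bitrate, ready to plot as an R-M curve."""
    return [(rate, mean(scores)) for rate, scores in sorted(results.items())]
```

With a different number of subjects, `t_crit` must be changed to the matching critical value.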

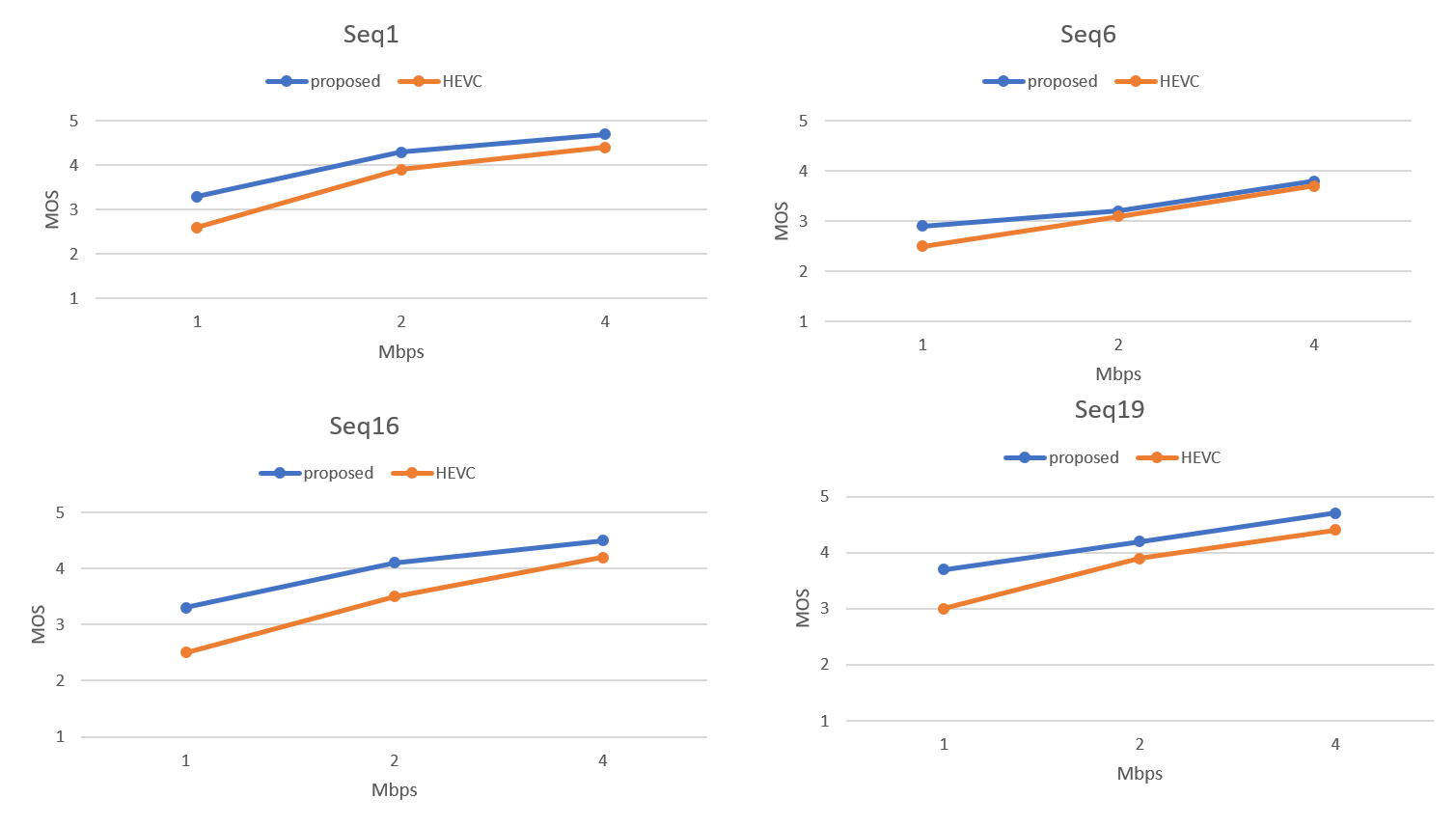

- The subjective MOS scores are plotted as curves.

Fig7. R-M curves for each sequence

Conclusion

The first part

- We use the saliency map as a reference to optimize 360-degree image coding performance.

- Experimental results show that the proposed 360-degree image compression technique saves up to 21.07% in bitrate.

The second part

- Based on human visual characteristics, we allocate more resources to the ROI so that users view higher-quality images.

- Both subjective and objective experiments show that the proposed 360-degree video compression and streaming technique outperforms the traditional method.